In 2020, things were going well for the Shep team: a big new partner/client, growing interest from new clients, a well-built and maintainable product, an engaged team, and a great culture. All the things you want in a start-up. But there was just one problem.

From its home in Austin, TX, Shep aims to improve what’s called “duty of care” and “travel booking leakage” for corporations.

“Duty of care” is basically an employer’s duty to look after traveling employees. It encompasses a lot: vaccines, safety, weather, and legal issues. As for leakage, large companies negotiate discounts with airlines, hotels, and more. When an employee books something that doesn’t use those negotiated discounts, it’s called “leakage”. Leaked bookings cost companies billions annually.

Stupid Pandemic

In case it wasn’t already clear, both of Shep’s value propositions require people to actually travel. 2020 was a challenging time, as clients began to hold off on investing in new tech to manage travel.

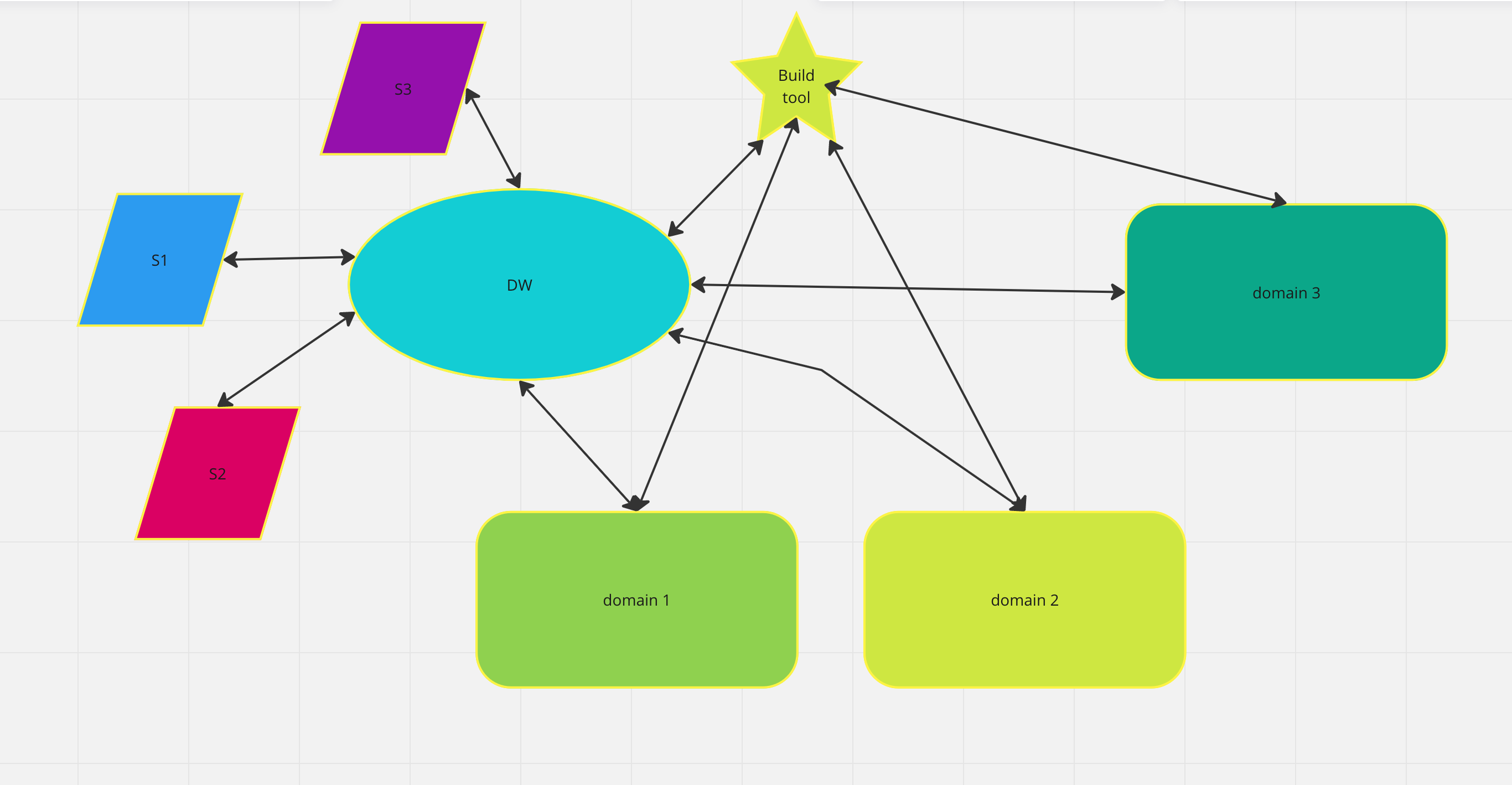

In 2020, Shep’s technology was a browser extension and a server. The extension had, and still has, the ability to detect when an employee is booking a flight outside of the client’s preferred travel platform. It also detects flight costs and tells the employee if they’re about to spend more than they should. In either case, the extension displays a message on the travel website the employee is using, as long as it is a site the extension is configured to work with. To do any of that, the extension has to be programmed with enough detail about how each website works. If Joe Coffeepot is about to book a trip on Expedia, the extension must understand how the Expedia booking pages are built. Version 1 of the extension was programmed to understand the top few hundred travel websites, including Expedia.

Scrapers gonna scrape

All of that site-specific code was maintained by a small team dedicated to spotting when a supported travel website changed its layout and then updating the extension to match. Any time a website changed significantly enough, the extension would stop working on that website until an update was created and deployed. It was a manual process with constant attrition. It was also the status quo for the industry, sort of an unmentionable best practice, and pretty cringeworthy from a scaling and customer experience perspective.
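To make the attrition problem concrete, here is a minimal sketch of what site-specific extraction looks like. It is not Shep’s code: the `SITE_RULES` table, the markup, the class names, and the fare cap are all invented for illustration, and it is written in Python rather than as a real browser extension.

```python
import re
from typing import Optional

# Hypothetical per-site rules: each supported host maps to a pattern that
# locates the fare in that site's booking page markup.
SITE_RULES = {
    "www.expedia.com": re.compile(
        r'<span class="total-price">\$([\d,]+(?:\.\d{2})?)</span>'
    ),
}


def extract_fare(host: str, html: str) -> Optional[float]:
    """Return the fare found on the page, or None if no rule matches."""
    rule = SITE_RULES.get(host)
    if rule is None:
        return None  # site not supported at all
    match = rule.search(html)
    if match is None:
        return None  # site supported, but the layout no longer matches
    return float(match.group(1).replace(",", ""))


def over_budget_message(host: str, html: str, fare_cap: float) -> Optional[str]:
    """Build the warning shown to the employee, if one is needed."""
    fare = extract_fare(host, html)
    if fare is not None and fare > fare_cap:
        return f"This fare (${fare:,.2f}) is above the company cap (${fare_cap:,.2f})."
    return None


# Invented markup: the same page before and after a small redesign.
old_page = '<div><span class="total-price">$1,240.00</span></div>'
new_page = '<div><span class="price-total">$1,240.00</span></div>'
```

With the old markup, `over_budget_message("www.expedia.com", old_page, 1000.0)` returns a warning. After the site renames one CSS class, the same call on `new_page` silently returns `None` until someone notices and ships a new rule. Multiply that by a few hundred sites and you have the attrition described above.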

I started working with Shep in early 2020 to help find a way to innovate out of the downward drift that the pandemic had inflicted and find improvements that would scale with the company.

My hypothesis was that by gathering structural metadata about all the travel websites Shep supported, we would have enough quality information to train ML models to determine whether a person intended to book travel, and where, when, and how.
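As a rough illustration of what “structural metadata” could mean, the sketch below turns a page’s markup into a handful of numeric features using only Python’s standard library. The feature names and the `TRAVEL_HINTS` keyword list are assumptions made for this example, not the features Shep actually collected.

```python
from collections import Counter
from html.parser import HTMLParser

# Hypothetical keywords that tend to show up in booking form attributes.
TRAVEL_HINTS = ("depart", "return", "origin", "destination", "passenger", "flight")


class StructureCollector(HTMLParser):
    """Counts tags, input types, and travel-related attribute values."""

    def __init__(self):
        super().__init__()
        self.tags = Counter()
        self.input_types = Counter()
        self.hint_hits = 0

    def handle_starttag(self, tag, attrs):
        self.tags[tag] += 1
        attr_map = dict(attrs)
        if tag == "input":
            # Inputs without an explicit type behave as text inputs.
            self.input_types[attr_map.get("type") or "text"] += 1
        # Count each descriptive attribute whose value contains a hint.
        for key in ("name", "id", "placeholder", "aria-label"):
            value = (attr_map.get(key) or "").lower()
            if any(hint in value for hint in TRAVEL_HINTS):
                self.hint_hits += 1


def page_features(html: str) -> dict:
    """Summarize page structure without relying on any site-specific selector."""
    collector = StructureCollector()
    collector.feed(html)
    return {
        "n_forms": collector.tags["form"],
        "n_inputs": collector.tags["input"],
        "n_date_inputs": collector.input_types["date"],
        "n_selects": collector.tags["select"],
        "n_travel_hints": collector.hint_hits,
    }


# Invented sample markup for a flight search form.
sample = (
    '<form><input name="origin"><input name="destination">'
    '<input type="date" name="departDate">'
    '<select name="passengers"></select></form>'
)
features = page_features(sample)
```

The point of features like these is that they describe the shape of a page rather than its exact selectors, so a renamed CSS class changes little or nothing. A model trained on many such pages then has a chance of recognizing a booking flow on a site it has never seen.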

If that hypothesis proved true, the team realized, we would have some amazing opportunities available to us and us alone. We could use those models to do useful and innovative stuff.

- We could reduce or maybe eliminate the code attrition issue.

- We could create new machine-learning-powered features that were completely unheard of in the industry. …hint hint.

A Huge Leap Forward

Well, it worked. Version 2 of the extension completely eliminated the code attrition issue and enabled highly context-aware, real-time messaging. It displays critical information about health (including COVID-19 policy), weather, civil, legal, and other issues at the right moment: when the person is booking. Imagine going to book a work flight on Expedia.com and seeing a message from your employer about the destination’s COVID policy and restrictions, passport requirements, and more.

Bye Scraper!

Site-specific code was replaced with trained models, hosted on API endpoints, that could interpret travel website structure more generally. This evolution immediately brought along support for hundreds of websites that had never been supported before, and we didn’t even have to code for them. In fact, in many cases, we didn’t even need to train the machine learning models on these additional websites. The models worked on them out of the box. Further, these new travel data points could be rolled up into a beautiful dashboard and provided to managers.

The lock had been picked.

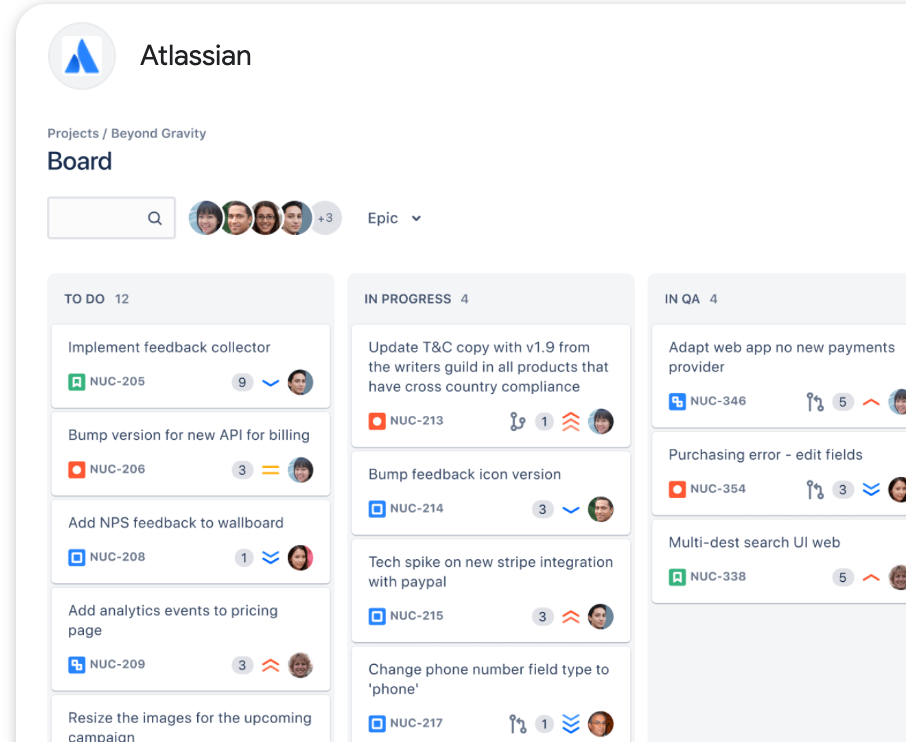

Since the team was no longer in the code attrition business, they could focus on really impactful work!

Rather suddenly, Shep was a game-changing disruptor in booking leakage and duty of care. Further, real-time context-aware messaging applies broadly and could be used outside travel. Shep was acquired by Flight Centre in 2022.

Keep reading to learn a little more about the tech we used to pull this off in months, not years.

Shep uses a combination of tech, mostly hosted on AWS. The linchpin is a specially configured Snowplow Analytics implementation, which collects web page metadata and user interactions into Amazon Redshift. Data processing is managed with Spark and MapReduce, also on AWS. dbt Cloud is used to build the reporting models and the data sets for training and testing the machine learning models. The models are deployed through a simple MLOps pipeline built with AWS Lambda, API Gateway, and SageMaker. This level of engagement, innovation, and technical know-how is designed into Signal Scout. It’s who we are.
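For readers curious how the serving end of such a pipeline might look, here is a minimal sketch of a Lambda function sitting behind an API Gateway proxy integration and forwarding page features to a SageMaker endpoint. It is an assumption-heavy illustration, not Shep’s implementation: the endpoint name, the `MODEL_ENDPOINT` variable, the JSON request and response shapes, and the `make_handler` helper are all hypothetical.

```python
import json
import os

# Hypothetical endpoint name, read from the Lambda environment.
ENDPOINT_NAME = os.environ.get("MODEL_ENDPOINT", "booking-intent-model")


def invoke_sagemaker(payload: dict) -> dict:
    """Send features to a SageMaker endpoint assumed to speak JSON both ways."""
    import boto3  # imported lazily so the handler can be exercised without AWS

    client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return json.loads(response["Body"].read())


def make_handler(invoke=invoke_sagemaker):
    """Build a Lambda handler; `invoke` can be swapped for a stub in tests."""

    def handler(event, context):
        # API Gateway proxy integration delivers the request body as a string.
        try:
            features = json.loads(event.get("body") or "{}")
        except json.JSONDecodeError:
            return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}
        prediction = invoke(features)
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(prediction),
        }

    return handler


# Entry point that the Lambda runtime would be configured to call.
handler = make_handler()
```

Passing the invoker in as a parameter keeps the handler testable locally: `make_handler(lambda features: {"is_booking": True})` exercises the request and response handling without touching AWS.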