Overview

Excited to augment your existing product or service with Machine Learning and AI? Fantastic – we’d love to help!

Hype aside, Machine Learning and AI (ML/AI) enable organizations to harness and monetize their data in novel, often exhilarating ways. But doing so requires real work, starting with the data. This article describes the high-level considerations for preparing your data for ML/AI. We’ll cover feature engineering, data as a service, and data monitoring: three concepts that improve the odds of a successful ML/AI project.

Transactions to Features

Feature engineering transforms raw data into usable “features” that represent important concepts like conversion, churn, engagement, or interest. Data are rarely, if ever, ready for ML/AI projects as-is, so we have to prepare them with feature engineering. For text and images, there are generally consistent, structured ways to represent the data. For tabular or transaction data, the opposite is generally true: there are many ways to represent the same data. So, where to start?
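
As a concrete starting point for transaction data, here is a minimal Python sketch that rolls raw purchase rows up into one row of features per customer. The `Transaction` record layout and the three features (recency, frequency, and total spend, a common pattern sometimes called RFM) are illustrative assumptions, not a prescribed feature set.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Transaction:
    # Hypothetical raw record; real transaction schemas will differ.
    customer_id: str
    timestamp: datetime
    amount: float


def build_features(transactions, as_of):
    """Roll raw transactions up into one feature dict per customer.

    Assumes every transaction occurred on or before `as_of`, the moment
    the features describe; recency is measured from it.
    """
    grouped = defaultdict(list)
    for tx in transactions:
        grouped[tx.customer_id].append(tx)

    features = {}
    for customer_id, txs in grouped.items():
        last_purchase = max(tx.timestamp for tx in txs)
        features[customer_id] = {
            # Recency: whole days since the most recent purchase.
            "days_since_last_purchase": (as_of - last_purchase).days,
            # Frequency: number of purchases on record.
            "purchase_count": len(txs),
            # Monetary value: total spend, rounded to cents.
            "total_spend": round(sum(tx.amount for tx in txs), 2),
        }
    return features


transactions = [
    Transaction("a", datetime(2024, 1, 1), 20.0),
    Transaction("a", datetime(2024, 1, 21), 30.0),
    Transaction("b", datetime(2024, 1, 10), 5.5),
]
features = build_features(transactions, as_of=datetime(2024, 1, 31))
```

Each customer ends up as one fixed-width row, which is the shape most ML/AI models expect; choosing *which* aggregates to compute is where the conceptual work below comes in.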

Conceptualization

Let’s say you want to predict conversion from prospect to customer. Ask yourself, “Why do people become my customers?” You might think of things like your landing page, your marketing campaigns, or the person’s income level. These are all concepts that can be represented in your data one way or another. Let’s call this conceptualization: coming up with a conceptual model for why people convert. Some important considerations for this step include:

- Context is everything: what’s special about your data, your product, your value proposition, your business?

- Don’t get hung up on details – that’s the next step!

- Don’t over-edit yourself – even if the specific concept isn’t easily captured, a good proxy can often be found.

Operationalization

Once you have nailed down a set of concepts for the ML/AI project, it’s time to define those concepts as they exist in the data: operationalization. For some features this will be easy, and for others not so easy. Take churn, for example. Most people easily understand the concept of churn, but its precise definition in the data may vary from business to business or even product to product. Maybe churn means no log-in or purchase for a month. Maybe it means a cancelled subscription. A cancelled subscription is an affirmative act that is easily captured, while waiting a month requires assumptions about customer behavior and time. It’s important to involve both data experts and subject matter experts in the choices made here. Poor assumptions or choices at this stage will put your ML/AI project at risk and be very difficult to fix later.
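
The two churn definitions can assign different labels to the same customer. A minimal sketch, assuming a hypothetical `Customer` record with a last-activity date and an optional cancellation date, and a 30-day window standing in for “a month”:

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class Customer:
    # Hypothetical fields; map these to whatever your systems record.
    customer_id: str
    last_activity: date                 # last log-in or purchase
    cancelled_on: Optional[date] = None


def churned_by_inactivity(c: Customer, as_of: date, window_days: int = 30) -> bool:
    """Behavioral definition: no log-in or purchase within the window.

    Relies on an assumption about how long a quiet customer is really gone.
    """
    return as_of - c.last_activity > timedelta(days=window_days)


def churned_by_cancellation(c: Customer, as_of: date) -> bool:
    """Affirmative definition: the customer cancelled on or before `as_of`."""
    return c.cancelled_on is not None and c.cancelled_on <= as_of


as_of = date(2024, 3, 31)
customers = [
    Customer("quiet", last_activity=date(2024, 2, 1)),
    Customer("cancelled", last_activity=date(2024, 3, 30), cancelled_on=date(2024, 3, 30)),
    Customer("active", last_activity=date(2024, 3, 25)),
]
# (inactivity label, cancellation label) per customer
labels = {
    c.customer_id: (churned_by_inactivity(c, as_of), churned_by_cancellation(c, as_of))
    for c in customers
}
```

Here the "quiet" customer is churned under the inactivity rule but not the cancellation rule, and the "cancelled" customer is the reverse, which is exactly why the choice needs input from both data and subject matter experts.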

Align ML/AI data with your intended use

Finally, your data preparation should reflect the data available when the ML/AI model needs to do its job in production. The data available when a new user hits the landing page is substantially different from the data available after they fill out a form with personal information. So you should not train a landing page conversion model on personal information: that data will never be available when the model needs to score a case.
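
One lightweight way to enforce this is to tag every feature with the earliest point in the funnel at which it exists and build each model’s feature list from that tag. The sketch below is a minimal illustration; the `Stage` values, the feature names, and the `FEATURE_AVAILABILITY` catalogue are all hypothetical.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Ordered points in a hypothetical conversion funnel.
    LANDING_PAGE = 1
    FORM_SUBMITTED = 2


# Hypothetical catalogue: feature name -> earliest stage at which it is known.
FEATURE_AVAILABILITY = {
    "referrer_domain": Stage.LANDING_PAGE,
    "device_type": Stage.LANDING_PAGE,
    "campaign_id": Stage.LANDING_PAGE,
    "reported_income": Stage.FORM_SUBMITTED,
    "postal_code": Stage.FORM_SUBMITTED,
}


def features_for(scoring_stage: Stage) -> list[str]:
    """Return only the features that exist when the model must score a case."""
    return sorted(
        name
        for name, available_at in FEATURE_AVAILABILITY.items()
        if available_at <= scoring_stage
    )


def check_training_columns(columns, scoring_stage: Stage) -> None:
    """Fail fast if a training set uses data the production model will not have.

    Columns missing from the catalogue are rejected too, so every new
    feature has to be tagged before it can be used.
    """
    allowed = set(features_for(scoring_stage))
    unavailable = sorted(set(columns) - allowed)
    if unavailable:
        raise ValueError(f"Not available at {scoring_stage.name}: {unavailable}")
```

Calling `check_training_columns(["device_type", "reported_income"], Stage.LANDING_PAGE)` raises a `ValueError` naming `reported_income`, catching the mismatch before a model is trained on data it will never see in production.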

Understanding the business needs plays a central role in properly preparing data for ML/AI, and we know it. We’ll walk you through the steps and work with you and your team until we nail these features down!

Treating Data as a Service

Could you run everything off your transaction database? Sure. Should you? Probably not.

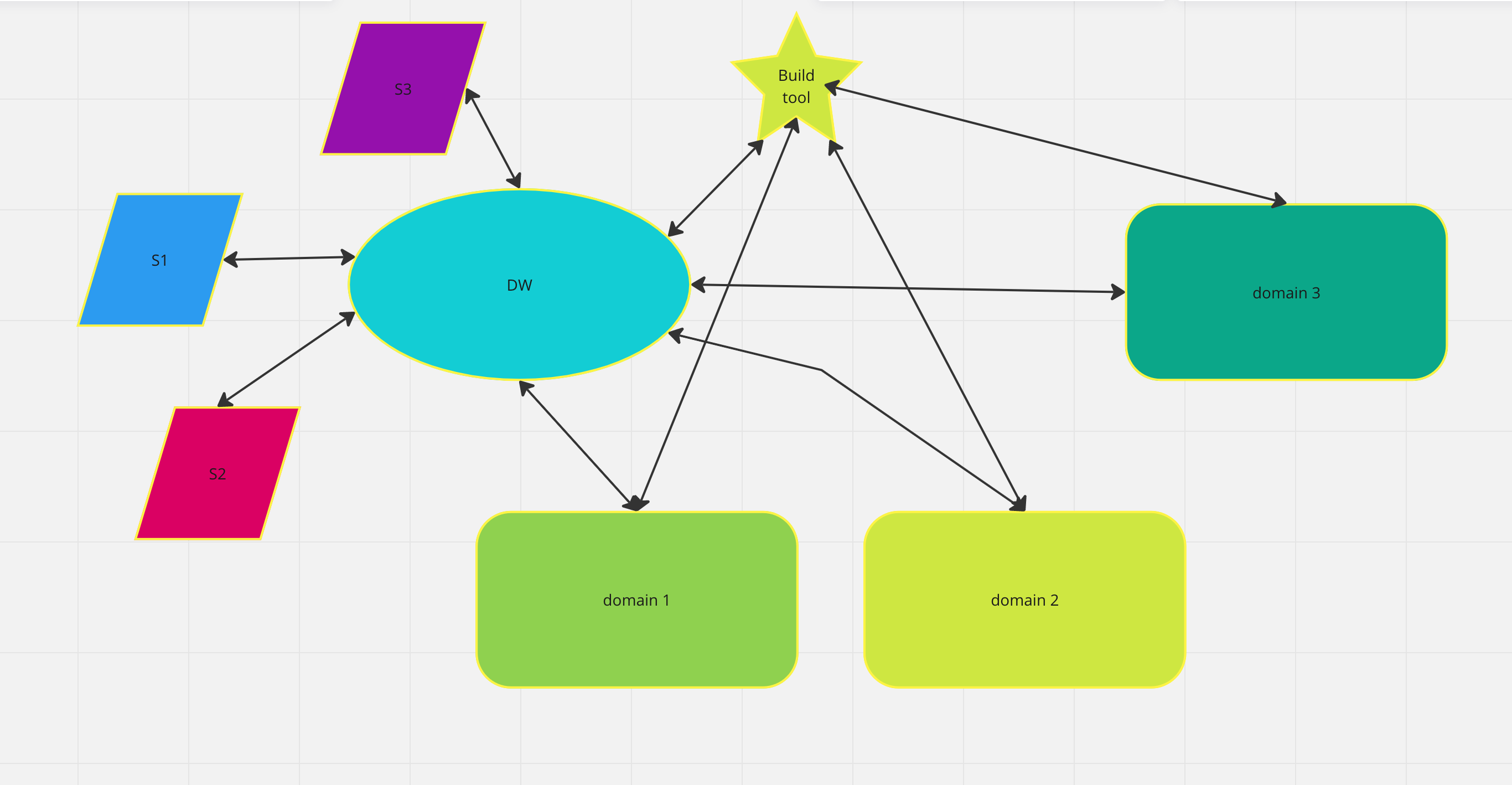

Where to store the data for ML/AI projects should be a relatively simple problem, yet there are countless products and services out there to confuse even the most technically savvy. Data warehouse, data lake, and feature store are largely new names for databases, but the concept behind them is novel: dedicate resources to serving data to modern analysts, data scientists, and machine learning engineers. Put another way: treat the data as a service.

Data Microservice

While many projects work fine simply querying a database, you might consider a data microservice for mission-critical ML/AI projects. Making the data accessible to any analyst or engineer can significantly improve a project, both when fitting an ML/AI model and when integrating that model into a larger production code base or product. Keeping everyone on the same data prevents unforced errors and lost time in getting models into production, prevents disagreement over the model’s source of truth, and encourages collaboration among people who use different programming languages (R vs. Python) or tool kits (Tableau, Excel).
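
To make “everyone on the same data” concrete, here is a deliberately tiny sketch of a data microservice: a WSGI app using only Python’s standard library that serves feature rows as JSON, so any client that speaks HTTP and JSON reads the same definitions. The in-memory `FEATURES` table and the `/features?customer_id=...` route are hypothetical; a real service would sit on your warehouse or feature store and add authentication, pagination, and caching.

```python
import json
from urllib.parse import parse_qs

# Hypothetical single source of truth; in practice this would be a query
# against the warehouse, lake, or feature store.
FEATURES = {
    "a": {"days_since_last_purchase": 10, "purchase_count": 2, "total_spend": 50.0},
    "b": {"days_since_last_purchase": 21, "purchase_count": 1, "total_spend": 5.5},
}

JSON_HEADERS = [("Content-Type", "application/json")]


def app(environ, start_response):
    """WSGI app: GET /features?customer_id=<id> returns that customer's features."""
    if environ.get("PATH_INFO") != "/features":
        start_response("404 Not Found", JSON_HEADERS)
        return [b'{"error": "not found"}']

    params = parse_qs(environ.get("QUERY_STRING", ""))
    customer_id = params.get("customer_id", [None])[0]
    row = FEATURES.get(customer_id)
    if row is None:
        start_response("404 Not Found", JSON_HEADERS)
        return [json.dumps({"error": f"unknown customer {customer_id}"}).encode()]

    body = json.dumps({"customer_id": customer_id, "features": row}).encode()
    start_response("200 OK", JSON_HEADERS + [("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves it at `http://127.0.0.1:8000/features?customer_id=a`; an R script, a Python notebook, and a production service then all receive identical rows.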

Distributed File Systems

Modern technology stacks provide several ways to represent the data, but few options enable fast, real-time consumption of millions to billions of cases or rows. Even a well-tuned, multi-threaded database can slow to a crawl once it has to fetch and serialize results at that scale. This is when you might want to consider an alternative: a distributed file system, or DFS.

With a DFS, you can store the data in large, distributed collections of files, in a format as close as possible to how the data is laid out in memory. Storing the data this way enables fast reads into memory, yes, but it also lets transformations be applied as the data is read into the computer’s memory. That’s a highly technical way of saying: rev up your data engines with a DFS.
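
Real deployments would pair a DFS such as HDFS with a columnar file format such as Apache Parquet, but the core idea can be sketched with Python’s standard library alone. In the sketch below, a local temporary directory stands in for the distributed file system, and the partition and column names are hypothetical. Each column of each partition is stored as raw 8-byte floats, so loading it is a bulk copy into memory with no parsing, and a transformation is applied as the values are loaded.

```python
import os
import tempfile
from array import array


def write_partition(root, partition, columns):
    """Write each column of one partition as a raw binary file of doubles.

    Hypothetical layout: <root>/<partition>/<column>.f64. The bytes on disk
    are exactly the bytes the array holds in memory (native byte order, so
    files are only portable between machines with the same endianness).
    """
    part_dir = os.path.join(root, partition)
    os.makedirs(part_dir, exist_ok=True)
    for name, values in columns.items():
        with open(os.path.join(part_dir, f"{name}.f64"), "wb") as f:
            array("d", values).tofile(f)


def read_column(root, partitions, column, transform=None):
    """Load one column from the requested partitions only.

    Partitions that are not requested are never opened (partition pruning),
    and `transform`, if given, is applied to each value as it is loaded.
    """
    out = array("d")
    for partition in partitions:
        chunk = array("d")
        with open(os.path.join(root, partition, f"{column}.f64"), "rb") as f:
            # Bulk copy into memory: no text parsing or per-row decoding.
            chunk.frombytes(f.read())
        if transform is not None:
            chunk = array("d", (transform(v) for v in chunk))
        out.extend(chunk)
    return out


# A local temporary directory stands in for the distributed file system.
with tempfile.TemporaryDirectory() as root:
    write_partition(root, "date=2024-01-01", {"spend": [10.0, 20.0]})
    write_partition(root, "date=2024-01-02", {"spend": [30.0]})
    write_partition(root, "date=2024-01-03", {"spend": [99.0]})
    # Read two of the three days and convert dollars to cents on the way in.
    spend_cents = read_column(
        root, ["date=2024-01-01", "date=2024-01-02"], "spend",
        transform=lambda dollars: dollars * 100,
    )
```

Formats like Parquet build compression, schemas, and per-file statistics on top of this same columnar, partitioned layout.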

Combined with your data service, a DFS can serve up millions or billions of rows in seconds, enabling rapid iteration on ML/AI models and rapid review of data analyses while reducing the load on the database.

Monitoring Data Early and Often

So you have followed all the advice and steps to secure a scalable, productive data set that will make your ML/AI team happy and successful. But do you know what’s going into your models? Getting telemetry, or insight, into the data used by your ML/AI model is key to ensuring long-term success and avoiding bias and drift.

Your ML/AI model will only ever get you the average relationships or effects. I don’t care what model you choose or what loss function you write; you really shouldn’t expect anything beyond the average of what’s already in your data. So you need to know what’s in that data!
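
A small worked example of that point: suppose one hypothetical customer segment responds to a marketing touch with a slope of 1 and another with a slope of 3. A single ordinary least squares fit on the pooled data, with no segment indicator, recovers a slope of 2, which describes neither segment.

```python
def ols_slope(xs, ys):
    """Ordinary least squares slope b for the model y = a + b * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


x = [1.0, 2.0, 3.0]
segment_a = [1.0 * v for v in x]  # hypothetical segment, true slope 1
segment_b = [3.0 * v for v in x]  # hypothetical segment, true slope 3

slope_a = ols_slope(x, segment_a)
slope_b = ols_slope(x, segment_b)
pooled_slope = ols_slope(x + x, segment_a + segment_b)  # 2.0: the average
```

The pooled estimate lands exactly on the average here because both segments have the same size and the same `x` values; in general it is a weighted blend, which is no more representative of any one segment.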

Why we monitor data

Monitoring data should be built into every modern ML/AI project. Just because you nailed down your conceptualization and operationalization doesn’t mean things won’t change. Concepts tend to drift over time due to changing behavior, changing products, or changing external forces. What was true when you trained a model one year may not be true the next.
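
One common way to quantify drift in a single numeric feature is the Population Stability Index (PSI), which compares the binned distribution at training time with the distribution seen later. This sketch uses equal-width bins over the training range; the bin count, the small `eps` floor for empty bins, and the often-quoted rule of thumb that a PSI above roughly 0.25 signals a major shift are conventions, not fixed standards.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bin edges are equal-width over the reference sample's range; values
    outside that range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    # PSI = sum over bins of (current - reference) * ln(current / reference)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


training = [float(i) for i in range(100)]
drifted = [v + 50 for v in training]  # same shape, shifted upward

stable_score = psi(training, training)  # 0.0 for identical samples
drift_score = psi(training, drifted)
```

Running a check like this on each feature on a schedule, and alerting when the score crosses an agreed threshold, turns “concepts drift over time” into something you can see before it hurts the model.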

If there is bias in your data, you can rest assured there will be bias in your ML/AI model. Monitoring for bias protects you from potential liability and negative customer experiences. Demographic bias, in particular, is wrong on its face and runs contrary to building ethical ML/AI systems. Unfortunately, bias is not always straightforward to spot.

Take the example of predicting whether a person will exhaust their unemployment insurance benefits. Using the county unemployment rate as a predictor would seem like a good idea to most people. However, some counties are largely made up of specific demographic groups, including counties that contain reservations, and these have suffered historically higher rates of unemployment. Is it fair, then, to keep using county unemployment rates to make individual predictions? Just because a demographic attribute isn’t used directly doesn’t mean there isn’t bias: a predictor like this can act as a proxy for it.
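
A first check for this kind of proxy bias is to compare a candidate predictor’s distribution across the groups you care about, even when the group attribute never enters the model. The sketch below computes a standardized mean difference (the gap in group means divided by a pooled standard deviation, as in Cohen’s d). The group labels and rates are made-up illustrative values, and any threshold for “too large” is a judgment call for your subject matter and legal experts.

```python
import statistics


def standardized_mean_difference(values_a, values_b):
    """Cohen's d style gap: (mean_a - mean_b) / pooled sample standard deviation."""
    mean_a, mean_b = statistics.fmean(values_a), statistics.fmean(values_b)
    var_a, var_b = statistics.variance(values_a), statistics.variance(values_b)
    n_a, n_b = len(values_a), len(values_b)
    pooled_sd = (((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)) ** 0.5
    return (mean_a - mean_b) / pooled_sd


# Made-up county unemployment rates (%) attached to individual claimants,
# split by a demographic attribute that the model itself never sees.
group_1 = [9.5, 11.0, 10.2, 12.1, 9.8]
group_2 = [4.1, 5.0, 3.8, 4.6, 5.2]

smd = standardized_mean_difference(group_1, group_2)
```

A gap of several standard deviations, as in this made-up data, means the predictor carries a lot of information about group membership, and its use deserves a closer look.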

To protect our ML/AI investments, we have to look underneath our concepts and figure out whether the data is changing or biased.

Using experiments to monitor data

Fortunately, we can use simple experiments or statistics to monitor for these types of issues. Simple A/B-style tests, comparing the same data at two different moments or comparing two groups of people, can readily identify problems in the data. Don’t just assume the data isn’t biased or drifting; test that belief and make corrections as needed.
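
For example, to test whether the share of records with a missing field differs between last month and this month (or between two groups of people), a two-proportion z-test is a simple option. The sketch below uses only the standard library; the counts are illustrative, and when you test many features at once you should account for multiple comparisons before acting on any single p-value.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Uses the pooled proportion under the null hypothesis that both samples
    share one underlying rate. Returns (z, p_value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # All-zero or all-one outcomes in both samples: no evidence of a difference.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative counts: records with a missing income field, last month vs. this month.
z, p_value = two_proportion_z_test(120, 5000, 210, 5000)
```

A very small p-value, as here, says the missing-data rate has genuinely shifted, which is a cue to investigate the upstream pipeline before the model quietly absorbs the change.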

Conclusion

Getting the data right ensures higher success rates in ML/AI projects but requires investment in expertise and infrastructure. The issues presented here may seem daunting, but getting the right help can ease the pain and get you to meaningful results sooner. Now that you have a better sense of how investing in your data yields better ML/AI products, we’d love to take you on this journey and deliver a well-rounded, data-centric ML/AI product.

Further reading: